Department of Computer Science, Stony Brook University

Generic visual object tracking is difficult because of many challenge factors (e.g., occlusion and blur). Each of these factors can cause serious problems for a tracker, and their combination complicates matters further. Despite a great deal of effort devoted to understanding the behavior of trackers, reliable and quantifiable ways of studying per-factor tracking behavior remain scarce. To address this issue, in this paper we contribute to the community a tracking diagnosis toolkit, TracKlinic, for diagnosing tracking algorithms with respect to individual challenge factors.

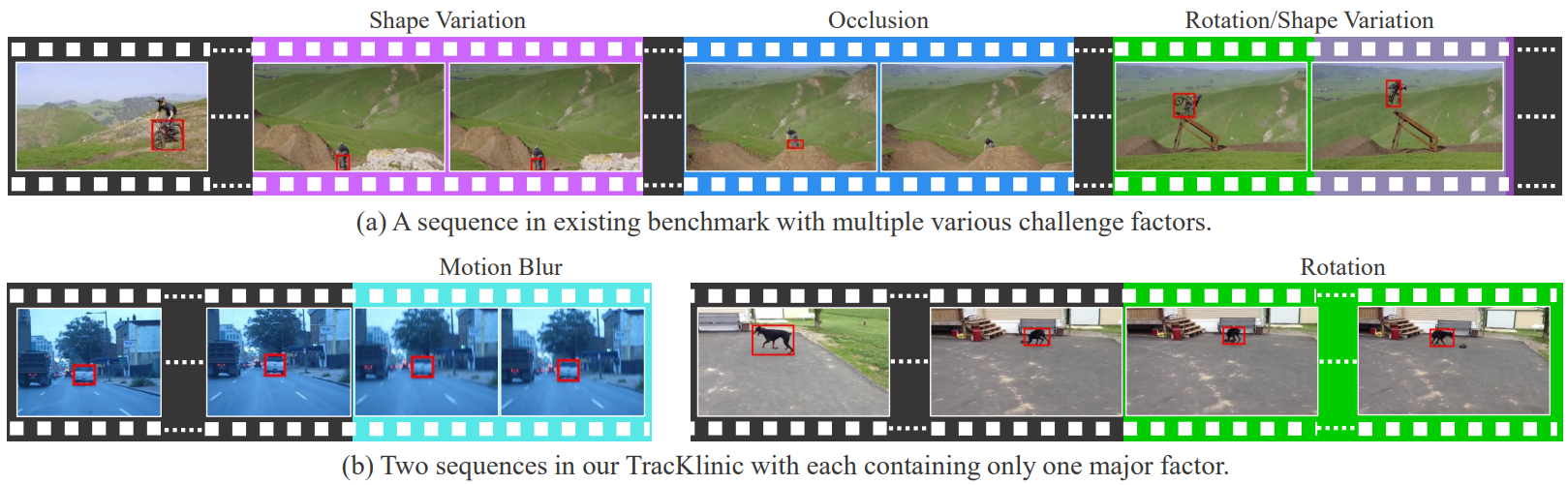

TracKlinic consists of two novel components focusing on the data and analysis aspects, respectively. For the data

component, we carefully prepare a set of 2,390 annotated videos, each involving one and only one major challenge factor (see Figure 1).

When analyzing an algorithm for a specific challenge factor, such one-factor-per-sequence rule greatly inhibits the disturbance from other factors and

consequently leads to more faithful analysis. For the analysis component, given the tracking results on all sequences, it investigates

the behavior of the tracker under each individual factor and generates the report automatically. With TracKlinic, a thorough study is

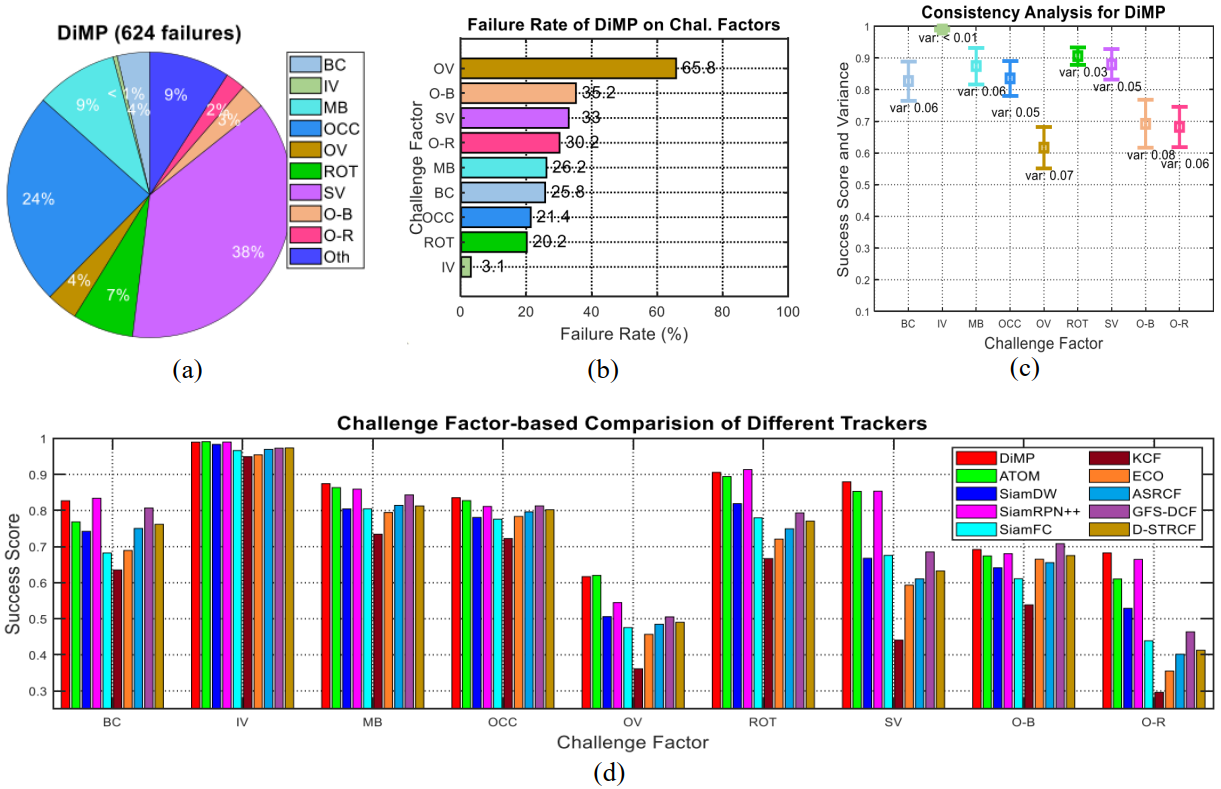

conducted on ten state-of-the-art trackers on nine challenge factors (including two compound ones). The results suggest that, heavy

shape variation and occlusion are the two most challenging factors faced by most trackers. Besides, out-of-view, though does not happen

frequently, is often fatal. By sharing TracKlinic, we expect to make it much easier for diagnosing tracking algorithms, and to thus

facilitate developing better ones.

Figure 1. Comparison between the existing tracking benchmark LaSOT and our diagnosis benchmark TracKlinic. Note that the per-frame factor annotations in image (a) were labeled by us; the original benchmark provides only global factor annotations.

Figure 2 shows an example diagnosis of the state-of-the-art tracker DiMP on TracKlinic.

Figure 2. (a): Percentages of failures. (b): Failure rates. (c): Consistency measurement. (d): Challenge factor-based evaluation.
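To give a rough idea of what a per-factor failure rate like the one in panel (b) involves, here is a minimal Python sketch. It is not the TracKlinic implementation: the function names, the `(x, y, w, h)` box format, and the failure criterion (a sequence counts as failed when its mean overlap with the ground truth falls below a threshold of 0.5) are all assumptions made for illustration. It relies only on the one-factor-per-sequence rule described above, so each sequence contributes to exactly one factor.

```python
from collections import defaultdict


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def per_factor_failure_rates(sequences, threshold=0.5):
    """Fraction of failed sequences for each challenge factor.

    `sequences` is an iterable of (factor, predicted_boxes, ground_truth_boxes).
    Hypothetical criterion: a sequence fails when its mean IoU < threshold.
    """
    failed = defaultdict(int)
    total = defaultdict(int)
    for factor, preds, gts in sequences:
        overlaps = [iou(p, g) for p, g in zip(preds, gts)]
        if not overlaps:
            continue  # skip sequences without any evaluated frames
        total[factor] += 1
        if sum(overlaps) / len(overlaps) < threshold:
            failed[factor] += 1
    return {factor: failed[factor] / total[factor] for factor in total}


# Toy data: two "occlusion" sequences (one tracked, one lost) and one "blur" sequence.
example = [
    ("occlusion", [(0, 0, 10, 10)], [(0, 0, 10, 10)]),
    ("occlusion", [(0, 0, 10, 10)], [(20, 20, 10, 10)]),
    ("blur", [(0, 0, 10, 10)], [(0, 0, 10, 10)]),
]
rates = per_factor_failure_rates(example)
print(rates)  # {'occlusion': 0.5, 'blur': 0.0}
```

Because every sequence carries a single factor label, no attribution logic is needed to decide which factor a failure belongs to; with multi-factor sequences the same failure would have to be split or double-counted.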

Heng Fan, Fan Yang, Peng Chu, Yuewei Lin, Lin Yuan, and Haibin Ling, "TracKlinic: Diagnosis of Challenge Factors in Visual Tracking," IEEE Winter Conference on Applications of Computer Vision (WACV), 2021.

Paper

Dataset

Evaluation Results